- Top-Down

- eliminating left recursion

- left factoring

- recursive descent

- computing first and follow

- LL(1)

- Bottom-Up

- Shift-reduce method

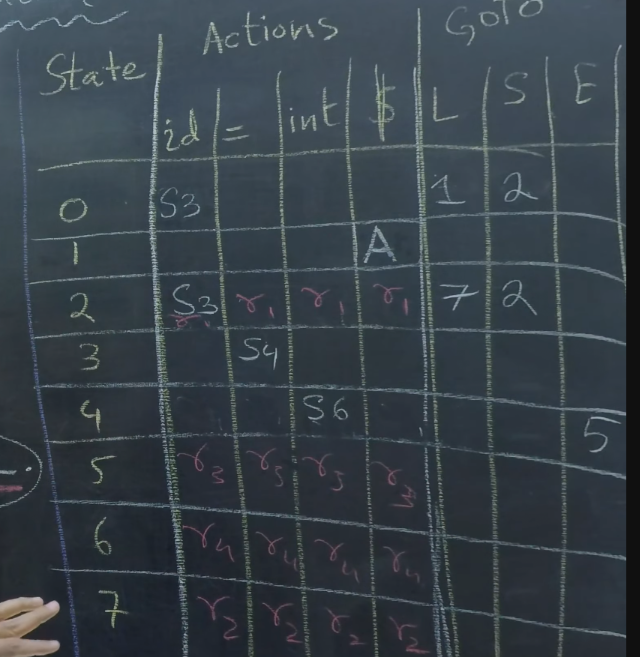

- LR(0)

- SLR

- CLR

- LALR

Top Down Parsing

Eliminating Left Recursion

replace

E : E + T | T

with

E : TE'

E' : +TE' | eps

Left Factoring

replace

S : if (expr) S else S

| if (expr) S

| other

with (take common part out)

S : if (expr) S S'

| other

S' : else S

| eps

Recursive Descent

import re

def tokenize(expression):

"""

look for numbers or brackets or operators

"""

token_pattern = r"(\d+|[()\^+\-*/])"

tokens = re.findall(token_pattern, expression)

return tokens

# parse functions for each non terminal

def parse_factor(tokens):

"""

F -> (E) | number

"""

token = tokens.pop(0)

if token == "(":

result = parse_expression(tokens)

# HACK: prolly should check below statement

tokens.pop(0) # get rid of )

return result

else:

return float(token)

def parse_exponent(tokens):

"""

X -> F ^ X | F

"""

left = parse_factor(tokens)

while tokens and tokens[0] == "^":

op = tokens.pop(0)

right = parse_exponent(tokens)

if op == "^":

left **= right

return left

def parse_term(tokens):

"""

T -> X*T | X/T | X

"""

left = parse_exponent(tokens)

while tokens and tokens[0] in "*/":

op = tokens.pop(0)

right = parse_exponent(tokens)

# NOTE: backtracking happening here

# to eliminate start predicting shit (LL(1))

if op == "*":

left *= right

else:

left /= right

return left

def parse_expression(tokens):

"""

E -> T + E | T - E | T

"""

left = parse_term(tokens)

while tokens and tokens[0] in "+-":

op = tokens.pop(0)

right = parse_term(tokens)

if op == "+":

left += right

else:

left -= right

return left

def evaluate_expression(expression):

tokens = tokenize(expression)

result = parse_expression(tokens)

return result

expr = "init"

while expr:

try:

print("parser: ", end='')

expr = input()

print(evaluate_expression(expr))

except EOFError:

print()

print("parser: exiting")

breakFirst and Follow sets

First :

"""

A := BC

"""

if eps not in first(B):

first(A) = first(B)

else if eps in first(B):

first(A) = (first(B) - {eps}) U (first(C))Follow:

"""

A := BC

"""

follow(C) = follow(A)

if eps not in follow(C):

follow(B) = first(C)

else if eps in follow(C):

follow(B) = (first(C) - {eps}) U (follow(A))-

compute first sets first

- go from bottom level production to top

-

then compute follow set

- go from top to bottom but might require multiple scans

-

examples: https://www.gatevidyalay.com/first-and-follow-compiler-design/

-

theory: https://cap.ecn.purdue.edu/compilers/lectures/Week3/lecture-3.3.pdf

LL(1) Grammars

- left to right leftmost derivation with 1 lookahead token

- A grammar is LL(1) iff whenever

A → α | βare two distinct productions, the following three conditions hold:- For no terminal

ado both α and β derive strings beginning witha. - At most one of α and β can derive the empty string.

- If β derives the empty string, then α does not derive any string beginning with a terminal in FOLLOW(

A).

Likewise, if α derives the empty string, then β does not derive any string beginning with a terminal in FOLLOW(A).

- For no terminal

for each production "A -> a":

for each terminal "a" in first("A"):

M["A"]["a"] = "A -> a"

if eps in first("A"):

for each "b" in follow("A"):

M["A"]["b"] = "A -> eps"example 1

S : +SS

| *SS

| a

\text{FOLLOW(S)} = \set{+, *, a, \}$

| Non terminal | a | + | * | $ |

|---|---|---|---|---|

| S | S → a | S → +SS | S → *SS |

example 2

S : (S)S

| eps

\text{FOLLOW(S)} = \set{\rparen, \}$

| ( | ) | $ | |

|---|---|---|---|

| S | S → (S)S | S → | S → |

- theory:

- practice:

Bottom Up Parsing

Shift-Reduce Method

- Shift: shift the next input symbol onto the top of the stack

- Reduce: the right end of the string to be reduced must be on the top of the stack. Locate the left end on the stack and replace with appropriate non-terminal

- Accept: announce successful completion of parsing

| stack | input | action |

|---|---|---|

| $ | a * a $ | shift |

| $ a | * a $ | reduce F → a |

| $ F | * a $ | reduce T → F |

| $ T | * a $ | shift |

| $ T * | a $ | shift |

| $ T * a | $ | reduce F → a |

| $ T * F | $ | reduce T → T * F |

| $ T | $ | reduce E → T |

| $ E | $ | accept |

- Bottom of stack always has $

- End of input always has $

CAUTION

not good with ambiguous grammars

- if you have time and want some more intuition on the automaton: https://blog.jeffsmits.net/lr-parsing-recursive-ascent/

LR Parsers (LR(0))

-

automaton: https://www.youtube.com/watch?v=BxMFn7aelBk

-

Items: productions that keep track of how much has been consumed

-

Closure: Include every possible production from a main production

-

Augmented Grammar: extra state to add an accept

LR(0) parsers

parsers don’t lookahead, so you reduce on every input token for a state

Simple LR Parsers

Now you are looking ahead one token. So you will only reduce when you encounter symbols in the follow set of the required non-terminal, instead of filling up every cell.

CLR Parsers

Example of LR(1) parser. So you make LR(1) automaton which includes a lookahead token derived from the first/follow sets

- peak: https://youtu.be/sh_X56otRdU?si=JH0TW6yPzWRzowZ3

- slightest touch of theory: https://cs.nyu.edu/~gottlieb/courses/2000s/2006-07-fall/compilers/lectures/lecture-07.html

similar to SLR parser but a bit more specific. Also takes a lot more memory and everyone hates it

LALR Parsers

- bruh: https://www.youtube.com/playlist?list=PLxP0p—aBHmJKqrSFBatFypgviqGRxMKOi

- you’re still wasting computation by doing the same stuff as CLR, but then you just combine rows and states to create a slightly smaller parsing table

- just use SLR atp

- also may lead to random fuckass conflicts on an originally non-conflicted grammar wow

its preferred over SLR just because of this lol